INTERVIEW: CYCLING '74

by Gregory Taylor, posted Sep 15, 2008.

It was a gradual process – an acquired taste. I started with rock music and classical guitar in the 70s, and played all sorts of jazz in the 80s. After that I made a transition to music heavily influenced by the style of the British improvisers.

GT: The easy guess here would be that you’re talking about players like Fred Frith or the late, great Derek Bailey. Am I on target here? If so, it seems like you’re also talking about a pretty large jump away from more traditional forms of jazz to some very different ideas about form and style and idiosyncrasy. Can you talk a little bit about your influences?

As for guitarists, hearing Sonny Sharrock around 1977 screwed me up big time. I was listening to Coltrane, who blew me away with his energy, but the jazz guitarists I heard at that time were just playing pling-plong guitar, that was it. Then someone played the live version of “Memphis Underground” from Herbie Mann’s record “Hold On, I’m Coming”, and this guitarist just exploded, banging the guitar with a slide and such… you could say I somehow discovered extended techniques. Right after that I heard Miles Davis’ “Agharta” at some party, and I tried to get hold of every Miles record Pete Cosey played on. I discovered the British improvisers’ scene through a record by the Music Improvisation Company, with Derek on guitar.

The transition to the tabletop guitar wasn’t that large a jump, because it took years. Anyway, toward the 90s I lost interest in melodic or harmonic development and became infatuated with odd sounds. First I experimented with the pedals and effects that were available at the time. But I quickly figured out that the stompbox approach wasn’t working for me; I was far more flexible in musical situations when I used all sorts of metals, sticks, stones and other tools. The sounds I could coax from my guitar that way were much richer and more interesting than what I got from pedals.

Toward the end of the 90s I regularly had my guitar lying flat on my lap. In my solo concerts – I’ve been playing solo regularly since 1994 – I often had several “voices” going on at the same time: for example, balancing a cymbal on the strings while playing two independent sounds with my hands, which you can hear on my 1998 solo CD “Endangered Guitar”. I also reintroduced pedals into my setup, routing the signals from various piezo and other pickups on my guitars through them on separate channels. The setup grew more and more complex, and eventually it made sense to put everything onto a table. With the guitar on a table I could exercise more control over subtle movements, because I no longer had to balance or hold the guitar. I could also execute certain ideas more quickly: my tools were right in front of me, so I was simply faster at grabbing them and putting them back.

GT: The trajectory you’re describing is interesting – it’s both a kind of story about emergence in terms of searching for more immediate and physical means to do something that interests you, but also this parallel interest in… quickness? lightness? fluidity? You’re looking for ways of maximizing possibility in every way you can. Although it seems like an obvious question, how did Max come into your life?

Well, I married a Max programmer….

GT: This is why you should always ask the obvious questions in an interview!

No, it’s no joke – I discovered Max when I met Dafna Naphtali, who has worked with Max as a performer, teacher and programmer for some 15 years. Dafna gave me a head start. It also helped that I had programmed business applications for small companies in Germany at the end of the 80s. Looking back, I think I was already searching for some interactive element in my solo concerts, but I wasn’t aware of it then. Like so many performers who come from a “traditional” instrument background, I just wanted to rebuild my complex setup inside the computer. But as usual in these cases, after a short while you come up with a million other ideas.

GT: Could you unpack that a little? When you talk about what you were missing in your solo work, what kinds of things were you looking for, outside of your own interaction with the physical system of the instrument and its treatments, or with your listeners? I’m not just asking about how being a programmer fits into this, since I think we’re really talking about something a bit larger – the way you think about improvising in general….

After all, I’m an improviser, and I appreciate the high art of improvisation in other parts of my life as well. But it becomes stale if you just rearrange what’s already in your toolbox, like players who use the same licks in every solo. I think my playing had become so controlled that I was looking to add some unforeseeable elements again. It took me a while to figure that out – at first I rejected every notion of randomness in my Max patch, but eventually I introduced a number of routines that allow me to be surprised within certain boundaries.
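The idea of being “surprised within certain boundaries” can be illustrated with a bounded random walk. The following is a minimal Python sketch, not a reconstruction of the routines in the Endangered Guitar patch; the function name, ranges and step size are hypothetical. Each step moves a parameter by a random amount, and clamping keeps it inside the range the performer has chosen.

```python
import random


def bounded_random_walk(start, low, high, max_step, steps, seed=None):
    """Return `steps` values of a random walk clamped to [low, high].

    Hypothetical illustration: the exact path is unpredictable, but the
    performer fixes the range and how far a single step may jump.
    """
    if not low <= start <= high:
        raise ValueError("start must lie within [low, high]")
    rng = random.Random(seed)  # seeded generator so a run can be repeated
    value = start
    values = []
    for _ in range(steps):
        # Random step of at most max_step in either direction...
        value += rng.uniform(-max_step, max_step)
        # ...clamped so the surprise never leaves the chosen boundaries.
        value = min(high, max(low, value))
        values.append(value)
    return values


# Example: a processing parameter wandering between 0.2 and 0.8.
walk = bounded_random_walk(start=0.5, low=0.2, high=0.8, max_step=0.1, steps=16, seed=1)
print([round(v, 2) for v in walk])
```

Narrowing `low`/`high` or `max_step` makes the result more predictable; widening them lets in more surprise.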

Generally speaking, I do live sound processing of my guitar and control the patch through my guitar playing. What I play controls the sound that I played before. When the live sound processing gets too crazy, I pull it back – because when it just sounds like a laptop, the guitar is useless. Over the years I became less zealous about making my guitar sound like anything but a guitar. It’s probably a phase many “experimentalists” go through, and at a certain point you ask yourself why you actually schlepp this instrument around. Currently I think it is not important at all to sound weird – an evil sound doesn’t make the revolution, and a beautiful sound doesn’t bring Hitler back to life.
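One way to read “what I play controls the sound that I played before” is as an envelope follower: the level of the current input sets how strongly a recording of earlier material is transformed. The Python sketch below assumes audio arrives as lists of floats; the reversal used as the “effect” is only a stand-in for whatever processing a real patch would apply, and all names and the level range are hypothetical.

```python
import math


def rms(block):
    """Root-mean-square level of one block of samples (0.0 for an empty block)."""
    return math.sqrt(sum(x * x for x in block) / len(block)) if block else 0.0


def scale(x, in_lo, in_hi, out_lo, out_hi):
    """Map x linearly from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))
    return out_lo + t * (out_hi - out_lo)


def process_history(history, live_block, full_level=0.5):
    """Use the level of what is played now to transform what was played before.

    The 'effect' is a crossfade between the stored material and its
    reversed copy, a placeholder for any real processing.
    """
    amount = scale(rms(live_block), 0.0, full_level, 0.0, 1.0)
    wet = list(reversed(history))
    mixed = [(1.0 - amount) * dry + amount * w for dry, w in zip(history, wet)]
    return amount, mixed


earlier = [0.1, 0.2, 0.3]
print(process_history(earlier, [0.0, 0.0, 0.0, 0.0]))    # silence: material unchanged
print(process_history(earlier, [0.5, -0.5, 0.5, -0.5]))  # loud: fully transformed
```

Playing louder pushes the earlier material further toward its processed version; playing softly leaves it closer to the original.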

GT: I guess this is as good a place to ask this question as anywhere: It seems as though there are a couple of generic approaches to using Max that emerge among people who actually become fluent with it. I’d describe one of those as the “patch as piece” approach, where each major outing requires something new, versus the “instrument” approach, where someone creates a larger structure that is then “learned” the way you’d develop any other set of skills on a more conventional instrument. Where do you see yourself along that continuum?

Certainly among the latter. I very much like your word “learned” here – yes, this is a new instrument, and it has to be learned. I practice working with new routines, like I practiced scales many years ago.

GT: Have you tried porting your patches to Max 5 yet? What’s that experience been like, if so?

So far, so good. My Max 4 version is stored away, and I’ve been performing with Max 5 for quite a while now. I had to make lots of GUI changes, though, because I use every available pixel on my screen – it took a while to adjust the elements.

GT: Can you talk a bit about how your performance patch is organized?

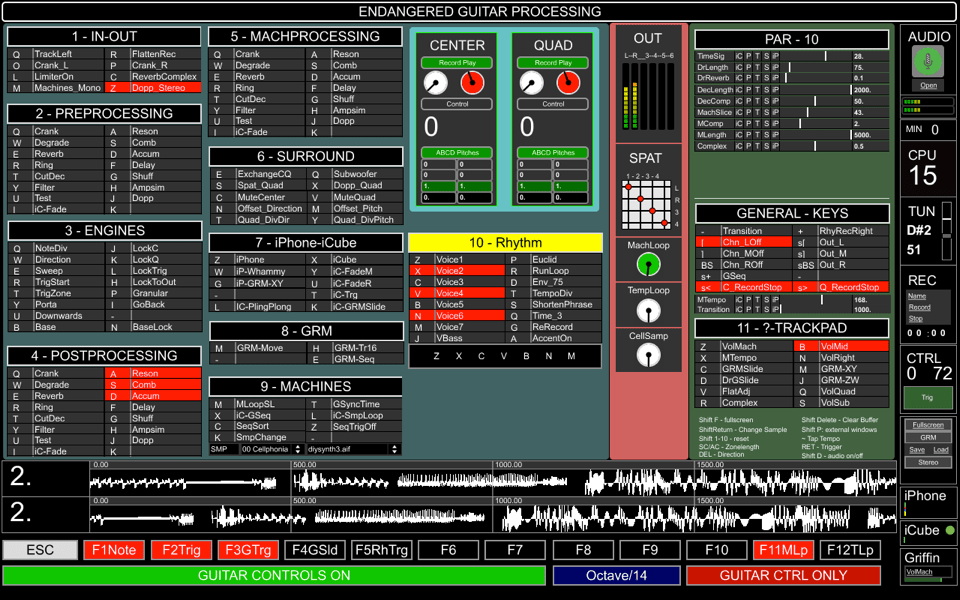

My Max patch currently consists of roughly 160 files. It is constructed in a very modular way, with multiple levels of bpatchers, abstractions and poly~ objects, all arranged by the main patch. The individual patches are often very simple; as soon as I have the feeling I can reuse something later, I make a bpatcher out of it. I use a lot of variables. This allows me to make changes very quickly – when I give a new routine a name, it takes me just a few seconds to integrate it completely into all GUI elements and the preset system, and to send internal and external controller data to any parameter. I have probably never played two concerts in a row with exactly the same patch – every concert brings a little change here and there, an adjustment, or just moving a routine to another key on the keyboard. Over the years I have thrown out 90% of what I ever wrote. If a routine isn’t used in a concert situation, it has to go. That’s no different from practicing scales or working on certain chord progressions – you test out a lot, but if you don’t use it on the next couple of gigs, forget it.

The patch really reflects the improvisational nature of my music making, if you want to look at it this way. Music for me has to be in constant flux; every concert, CD, or discussion influences me in ways that immediately affect my next move. As an improviser, you constantly push your own boundaries – the idea of creating something set in stone, played the same way every time, is foreign to me. Writing a fixed score is obsolete for me, because it’s outdated as soon as I go to the first rehearsal. When writing for a larger ensemble, as I currently do with my 14-piece “Third Eye Orchestra”, I address this by creating a large score that has to be arranged live by the conductor every time it’s performed. That’s probably also one of the reasons I ended up using Max – doing your own programming suits this kind of approach very well.
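Naming a routine once and having the GUI, the preset system and controller mappings all reach it by that name amounts to a name-based parameter registry. In Max this kind of routing is typically built from named send/receive or value objects; the Python class below is only an analogy under that assumption, not the structure of the actual patch, and the parameter name `grain_size` is hypothetical.

```python
class ParameterRegistry:
    """Hypothetical name-based registry: everything addresses a parameter by name."""

    def __init__(self):
        self.values = {}   # parameter name -> current value
        self.presets = {}  # preset slot -> snapshot of all values
        self.cc_map = {}   # (MIDI channel, controller number) -> parameter name

    def register(self, name, default=0.0):
        """Adding a name is the only step needed to expose a new parameter."""
        self.values[name] = default

    def set(self, name, value):
        if name not in self.values:
            raise KeyError(f"unknown parameter: {name}")
        self.values[name] = value

    def store_preset(self, slot):
        self.presets[slot] = dict(self.values)

    def recall_preset(self, slot):
        # Restore only names that still exist, so old presets survive
        # routines being renamed or thrown out.
        for name, value in self.presets[slot].items():
            if name in self.values:
                self.values[name] = value

    def map_controller(self, channel, cc, name):
        self.cc_map[(channel, cc)] = name

    def on_controller(self, channel, cc, raw):
        """Route a 7-bit controller value (0-127), scaled to 0.0-1.0, by name."""
        name = self.cc_map.get((channel, cc))
        if name is not None:
            self.set(name, raw / 127)


reg = ParameterRegistry()
reg.register("grain_size", 0.5)
reg.map_controller(1, 7, "grain_size")
reg.store_preset("intro")
reg.on_controller(1, 7, 127)   # controller moves the parameter to 1.0
reg.recall_preset("intro")     # preset brings it back to 0.5
```

Because presets store names rather than positions, throwing out a routine only drops its own entries instead of breaking every stored preset.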

By the way, Harvestworks, where I work, is soon embarking on a series of research workshops centered around the question of how improvisational strategies and digital media tools affect each other, and we plan to present the outcome in a book or at a conference in 2009.

GT: Speaking of that, some people may know you by way of your work with the Harvestworks artists’ organization in addition to this giant pile of CDs and list of performances. How did you come to be involved in the work there?

Well, I married a Max programmer….

GT: Would I be correct in thinking I’m detecting a pattern here?

Dafna was working at Harvestworks as a Max teacher and programmer, and when they were looking for someone to run the studios in 2001, she told me. So I applied for the job. It didn’t take long to discover that this is a fun place with a great community. There are always students, artists in residence, or interns hanging out in our lab room working on their projects, often coming from several different countries. What goes on there is a good inspiration for my own work; you meet all sorts of interesting people and discuss weirdo projects. Live sound processing of knitting needles, butterfly movements turned into music, and a flock of 300 movies flying around the viewer in surround video are just some of the projects my programmers are currently working on.

GT: Despite its great work and profile in the new media universe, I’ll wager that there are some people reading this who aren’t familiar with what Harvestworks is. It might help them to have a clearer idea of how unique an institution it is.

Harvestworks is a non-profit organization, helping artists create their work. It was created in 1977 by two artists, Jerry Lindahl and Greg Kramer. At that time, when you wanted to create an electronic composition, you were either part of a university that had an electronic studio, or you had to lay out a considerable amount of money to buy a synthesizer. But there has to be something for not-so-lucky artists, too!

So these guys went out and created a community center where you could rent a Buchla or other equipment for $3/hr to create a piece. Now we have around 250 different clients a year, an artist-in-residence program, and audio and video studios; we put on festivals and presentations, program interactive applications with Max/MSP/Jitter, and run a large education program. Max has actually been around Harvestworks for a long time. A while ago we found an old flyer inviting people to a Max user group in 1989. And Dafna has been teaching and programming Max at Harvestworks since 1995. I currently have 7 or 8 people working with my clients, but Zachary Seldess, Matthew Ostrowski and Dafna Naphtali do the bulk of the work here.

GT: One of the interesting things about Harvestworks as an entity has been the development of their “Certificate Programs,” Max being one of those programs. I wonder if you could talk a little bit about how that came to be.

The “Certificate” came into being a few years ago. With ever more powerful computers, and with Max now covering both audio and video, artists could go in all directions. You need something that addresses individual needs better than a class can. On top of that, the projects often involve several specialists, so you need someone to bring the right people together, like a project manager.

GT: A good friend of mine says that there’s no such thing as an “intermediate” Max user – merely people who haven’t specialized yet.

He’s right. That’s also why we gave up on “intermediate” classes. What we’re doing instead are classes focusing on special topics, like “multichannel audio and video spatialization”, “feedback – taming the beast”, “better and faster patch building” and things like that. That seems to work better.

GT: It seems like the Max list is always full of beginners who want to do a surprisingly small number of things: build drum machines or loopers or synths, or get their OpenGL tori to throb along with the beat. Since you’re trying to serve a really diverse group of artists, what other subjects do you see as emerging interests beyond teaching the basics?

We don’t only have audio and video performers, but also many installation artists. If I look at my artists’ needs, two things stand out today: live camera motion tracking, and audio and video spatialization. Just today someone signed up for a Certificate to focus on Ambisonic multichannel audio. We also have lots of camera motion tracking projects for dance, installations and performance – that seems to be the hit right now. A few years ago, everybody was into advanced audio analysis, and we did lots of FFT stuff and hybrid instruments like a shakuhachi controller, where we had to analyze the airstream. It is interesting to see how this changes. I have two artists planning to work with GPS or RFID technology, and I’m sure that’s a topic that will soon become more mainstream, too.

GT: Very interesting. I guess I’d presume that this kind of subject material might require a somewhat different relationship than, say, teaching people how to build variations on the drumbox.

Yes, it’s all over the place, and you have to address this somehow. So we came up with the Certificate Program, which is entirely one-on-one. We assess the student’s needs, design an individual curriculum, and the student learns by working on their own project. Although the program includes just 20 hours of these regular tutorials (some people do several of these certificates in a row), you can work at any time with experienced Max interns, and together with other students who are currently around. That way we can make much more out of it than 20 hours of tutorials alone would give you. At any given time we have 3, 4, or 5 Max certificates running, including lots of artists coming from other states or other countries. I’m sure it’s also an advantage that we’re located in New York…

For those who need basic classes, we recently added the full-week, 40-hour Max Intensive course to our program: a crash course in basic Max, with a little MSP and Jitter.

GT: How has the arrival of Max 5 changed your pedagogical activities at Harvestworks, if at all?

Max 5 came out shortly before one of our beginners’ Max/MSP Intensive courses, so my teachers had to jump into Max 5 head over heels. All courses are taught in Max 5 now, but there could be one or two [individual] Certificate students who might still work in Max 4. It’s too early to gauge our experience – certainly many things in Max 5 are a lot easier, but with so many more options available to the student, there is also more need for the teacher to reduce the complexity.

GT: I’ll pause before asking this next question and I’ll wait while you put on your “Harvestworks Propeller Beanie”: What kinds of sensor solutions do you find yourselves working with at Harvestworks, and are there any kinds of general questions that drive the choices your clients and students make?

To my clients I usually explain the extremes: the Arduino is by far the cheapest device, but you have to do a lot to make it work. At the other end of the spectrum is the I-CubeX family from Infusion Systems. It costs far more than an Arduino board, but is practically plug and play. You can place most other devices somewhere between those extremes. If you like to solder, have a little space to do it, and enjoy figuring out how to program an Arduino, do it. If you don’t want to solder, get an Infusion Systems interface and just plug it in and go.

If you can do at least some assembly yourself, you can look into other devices that are more affordable. Miditron, Eobody, Making Things – they’re all good. There are also other issues that can be important such as networking possibilities or the speed of the data transmission. If you need a very fast interface, then any MIDI-based device such as Eric Singer’s Miditron might not be the right choice. However, I haven’t seen that many projects that demand a faster connection than MIDI.
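“Faster than MIDI” can be put into numbers. MIDI 1.0 runs at 31,250 bits per second, and every byte takes 10 bits on the wire (a start bit, 8 data bits and a stop bit). The calculation below assumes each sensor reading is sent as a three-byte control change message without running status; it is a back-of-the-envelope estimate, not a measurement of any particular interface, and the eight-sensor split is a hypothetical example.

```python
MIDI_BAUD = 31_250     # MIDI 1.0 serial rate in bits per second
BITS_PER_BYTE = 10     # 1 start bit + 8 data bits + 1 stop bit
CC_MESSAGE_BYTES = 3   # status byte, controller number, 7-bit value


def cc_messages_per_second(message_bytes=CC_MESSAGE_BYTES):
    """Upper bound on complete messages per second over one MIDI cable."""
    return MIDI_BAUD / BITS_PER_BYTE / message_bytes


rate = cc_messages_per_second()
ms_per_message = 1000 / rate
print(f"{rate:.0f} messages/s, {ms_per_message:.2f} ms per message")
print(f"shared by 8 sensors: {rate / 8:.0f} updates/s each")
```

That is roughly a thousand messages per second, just under a millisecond each. With running status (the repeated status byte omitted), a message shrinks to two bytes and the ceiling rises to about 1,560 per second.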

And don’t forget one more thing: you should do what your friends are doing. Those are the people you can ask for help – you might even be able to call them up at 11pm with a question. Sorry to say, but Harvestworks is closed at that time.

GT: Looking at the website, I noticed that your discussion of the Max Certificates specifically mentions working with sensors. In fact, it’s really the only non-Max-specific thing mentioned.

It’s just because so many people ask for it. The majority of students have some project in mind that involves sensors. However, not every project needs them. In recent years many of the sensor projects that came in were done more cheaply and easily with camera tracking.

GT: Questions about working with external hardware in general, and sensors in particular, so often come down to personal endorsements on the Max list. What kinds of sensor technologies do you work with as a performer?

I experimented with sensors for years, but until last year I never succeeded in adding a new quality to the music. Now I do use a proximity sensor, sitting right in front of my guitar. It’s actually funny how that came about – when I’m playing I often wave my hands around, and on numerous occasions people asked me what kind of sensor I was controlling with my hands. At the 2007 NIME conference it occurred to me to use exactly those movements to control certain parameters. Since I’m lazy, I bought a “microDig” from Infusion Systems – it took me literally 5 minutes to set it up, and I’ve performed with it ever since.

The outcome was different, though – those hand movements didn’t work out musically and it turned out to be much more effective to situate the sensor on the table right in front of me. A slight movement of my head into the beam allows me to control a parameter, or to switch something on/off. That way, when I use both hands to create two voices, a move of my head can set up a third voice. What’s nice about it is that you don’t see how I control it, because very small movements are sufficient.
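Using a head movement into the beam as an on/off switch raises a practical question: readings hover around any threshold, so a naive comparison can flicker on and off. A common remedy is hysteresis, with separate thresholds for entering and leaving the beam. The Python sketch below assumes sensor readings arrive as numbers from 0 to 127; the threshold values are hypothetical, and this is not how the microDig or the actual patch is configured.

```python
class ProximityToggle:
    """Turn a stream of proximity readings into an on/off switch.

    Hysteresis: the head counts as 'in the beam' at or above `enter` and only
    as 'out again' at or below `leave`, so jitter near one threshold cannot
    retrigger the switch.
    """

    def __init__(self, enter=80, leave=60):
        if leave >= enter:
            raise ValueError("leave threshold must be below enter threshold")
        self.enter = enter
        self.leave = leave
        self.inside = False  # is the head currently in the beam?
        self.state = False   # the switched parameter

    def update(self, reading):
        if not self.inside and reading >= self.enter:
            self.inside = True
            self.state = not self.state  # toggle once per entry into the beam
        elif self.inside and reading <= self.leave:
            self.inside = False
        return self.state


toggle = ProximityToggle()
readings = [10, 50, 85, 78, 82, 90, 55, 20, 85, 30]
states = [toggle.update(r) for r in readings]
print(states)
```

The dip from 85 to 78 and back up to 82 does not flip the switch a second time; only leaving the beam and entering it again does. A continuous parameter could be driven from the same readings by scaling them instead of thresholding them.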

GT: Despite your Harvestworks life, you’ve obviously kept up a busy schedule of performing and recording, and, as with lots of musicians, there’s a gap between what you’re doing at the moment and what those of us who don’t live in or near Manhattan can download or pick up as recordings. That said, what are you working on now?

I’m working on a CD with my trio Die Schrauber right now. It’s a trio with circuit bender Joker Nies from Cologne and laptop player Mario deVega from Mexico City. The CD will use live excerpts from our recent 11-city tour in Europe, with the material centered around the rhythmic elements of our music. I am also gradually introducing grooves derived from my guitar sounds into my solo music, although I’m still looking for a musically satisfying solution to control the grooves with my guitar.

I also need to edit the material for my Choking Disklavier project. I am not fond of pieces that show off the “superhuman” capabilities of the Disklavier or other musical machines; instead I look for their particular sound qualities. For this project, I overloaded the piano with data at the lowest possible velocity, so that the hammers do not reach the strings. Low rumbling and crackling noises emanate from the machine – often very rhythmically – with occasionally a string ringing on top.
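The “choking” technique comes down to sending a dense stream of note messages at minimal velocity. The Python sketch below only builds raw MIDI bytes; it assumes velocity 1 is low enough that the hammers stop short of the strings, which in practice depends on the instrument and would have to be found by ear. The function name, note range and message count are hypothetical, and sending the bytes to a Disklavier is left out.

```python
import random

NOTE_ON = 0x90
NOTE_OFF = 0x80


def choking_burst(notes, count, velocity=1, channel=0, seed=None):
    """Build `count` note-on/note-off pairs as raw 3-byte MIDI messages.

    Velocity 1 is the lowest usable value: a note-on with velocity 0 is
    conventionally interpreted as a note-off.
    """
    if not 1 <= velocity <= 127:
        raise ValueError("velocity must be between 1 and 127")
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    rng = random.Random(seed)
    notes = list(notes)
    messages = []
    for _ in range(count):
        note = rng.choice(notes)
        messages.append(bytes([NOTE_ON | channel, note, velocity]))
        messages.append(bytes([NOTE_OFF | channel, note, 0]))
    return messages


# 200 soft notes scattered over the lowest two octaves of an 88-key piano.
burst = choking_burst(notes=range(21, 45), count=200, seed=7)
print(len(burst), burst[0].hex())
```

Timing is omitted: a real performance tool would also schedule these messages, and how dense the stream must be to “overload” the instrument is not something this sketch determines.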

Then I have a new CD out on Innova with my Third Eye Orchestra, a 14-piece ensemble with live sound processing, where I wrote a large score but arrange it live, in the style of Earle Brown. You might not hear it that way, but I feel it’s just the continuation of my guitar work by other means. The next concert will be in March 2009, and I’m already collecting ideas for the next score.

Endangered Guitar Max/MSP Patch