ENDANGERED GUITAR IN:

MUSICAL INSTRUMENTS IN THE 21ST CENTURY

I am happy to announce that my "Case Study: The Endangered Guitar" is included in a book published by Springer Singapore: Musical Instruments in the 21st Century - Identities, Configurations, Practices. Editors: Bovermann, T., de Campo, A., Egermann, H., Hardjowirogo, S.-I., Weinzierl, S. (c) 2017.

Read my contribution in full below. For online reading, footnotes are at the end of each chapter, links are inserted, with book references at the bottom of the article. Links were accessed August 2016.

Updates to the text (there were major revisions to the software in 2017 and 2018) are at the bottom of this page. The page also has a video excerpt of my solo contribution to the symposium's concert event in Berlin, 2016.

Over 400 pages, 95 illustrations, 25 articles by a wide range of scholars and practitioners.

By exploring the many different types and forms of contemporary musical instruments, this book contributes to a better understanding of the conditions of instrumentality in the 21st century. Providing insights from science, humanities and the arts, authors from a wide range of disciplines discuss the following questions:

- What are the conditions under which an object is recognized as a musical instrument?

- What are the actions and procedures typically associated with musical instruments?

- What kind of (mental and physical) knowledge do we access in order to recognize or use something as a musical instrument?

- How is this knowledge being shaped by cultural conventions and temporal conditions?

- How do algorithmic processes 'change the game' of musical performance, and as a result, how do they affect notions of instrumentality?

- How do we address the question of instrumental identity within an instrument's design process?

- What properties can be used to differentiate successful and unsuccessful instruments? Do these properties also contribute to the instrumentality of an object in general?

- What does success mean within an artistic, commercial, technological, or scientific context?

CASE STUDY: THE ENDANGERED GUITAR

Hans Tammen

The “Endangered Guitar” is a hybrid interactive instrument meant to facilitate live sound processing. The software “listens” to the guitar input and uses it to determine the parameters for the electronic processing of those same sounds, responding in a flexible way. Since its inception 15 years ago it has been presented:

- in hundreds of concerts;
- in 23 different countries on 4 continents;
- in settings from solo to large ensemble;
- through stereo and multichannel sound systems, including Wavefield Synthesis;
- in collaborative projects with dance, visuals, and theater;
- across different musical styles.

It is well developed, so it is time to describe the history of this instrument and to look at the paths taken and the paths abandoned. The latter are equally significant, because some of them represent musical approaches that, however important, eventually ran their course. It is worth remembering that there was a musical concept behind every change in the instrument, and as I changed as a composer, my ideas changed as well. In this respect there is no difference between the Endangered Guitar and my works for chamber ensemble: some techniques stay at the core of my work, others stay for a few years, and others do not last beyond a single piece.

Today I call my setup the Endangered Guitar when the following tangible components are present: (a) the actual guitar, of course; (b) the specific tools and materials that facilitate sonic progression; (c) a computer. However, the computer does more than mimic a guitar effects board; the software is part of an interactive instrument. More than 30 years ago, Joel Chadabe described the consequences as follows:

“An interactive composing system operates as an intelligent instrument — intelligent in the sense that it responds to a performer in a complex, not entirely predictable way adding information to what a performer specifies and providing cues to the performer for further actions. The performer, in other words, shares control of the music with information that is automatically generated by the computer, and that information contains unpredictable elements to which the performer reacts while performing. The computer responds to the performer and the performer reacts to the computer, and the music takes its form through that mutually influential, interactive relationship. The primary goal of interactive composing is to place a performer in an unusually challenging performing environment.” (Chadabe 1984)

These are the concepts that constitute the Endangered Guitar:

- It is a hybrid instrument, in that it needs both parts, the guitar and the software, to function and sound the way it is intended.

- It is interactive, in that the software responds in a flexible way to the guitar input, and both performer and software contribute to the music.

- It is unpredictable in its responses, because it is designed for an improviser.

- It is a process, in that it was not conceived and built at a single point in history, but developed over 15 years, with no end in sight.

- It is modular, because programming in a modular way allows for rapid changes in the structure.

What follows is a history and description of its components as they relate to the concepts mentioned above.

(Endangered Guitar Performance Photo by Matthew Garrison 2012)

The Instrument As Process

The Endangered Guitar is made for me as an improviser operating in a variety of musical situations (1). I am interested in a wide range of artistic expressions, collaborations and presentation modes. Since one cannot foresee what a specific musical situation will require the software to provide, I could not foresee what I would have to program into the machine.

The Endangered Guitar is a continuing process, and even this account is just today’s snapshot. It grew naturally out of 25 years of musical development, and it followed (and in turn influenced) my musical paths for the next 15 years. It was not even planned to be “hybrid” or “interactive”. In fact, when I started working on it, I had no concept of these terms in relation to my music. So how did it come to that (2)?

(1) I do not pretend that the instrument is designed for ALL cases, because there is music I am not interested in.

(2) I can’t resist bringing up Joel Chadabe’s observation: “I offer my nontechnical perception that good things often happen — in work, in romance, and in other aspects of life — as the result of a successful interaction during opportunities presented as if by chance”. (Chadabe 1984)

Background

(Pre-Computer Performance Photo 1999)

In the 1990s I moved towards improvising in the “British Improvisers” style (1), which provided the right framework for my sonic interests. It requires quick reaction in group settings, transparency of sounds, and fast shifts in musical expression (2). My approach to the guitar became very similar to Fred Frith’s. I used sticks, stones, motors, woods, mallets, Ebows, measuring tapes, vacuum cleaners, violin bows, metals, screws, springs and any other tools I could lay my hands on to coax sounds from the instrument. Not a single hardware store could escape my search for the perfect screw, the perfect spring. During this period the guitar lay flat on my lap and was treated as an elaborate string board, often equipped with multiple pickups. Adding a small mixer allowed for tricky routing of signals through the occasional guitar pedal.

The sonic range I achieved can be best heard on my 1998 solo CD “Endangered Guitar”, released in a metal box on the NurNichtNur label. That’s also when I coined the term - I just needed a title for the CD. I felt it was a break with the traditional notion of the guitar, but not so far as to call it “extinct”, the guitar being just “endangered” (3).

(1) I listened to this music as soon as Music Improvisation Company's LP came out on ECM in 1970. But I started to understand its implications when I read Derek Bailey's account in the 1987 German translation of the first edition of his book "Improvisation: Its Nature And Practice In Music" (Bailey 1987).

(2) I can illustrate those musical interactions best with our quartet recording from 2000, Kärpf.

(3) Many guitarists have come forward since Rowe and Frith began using these techniques, especially during the explosion in the 1990s. In light of this, it is safe to say that playing the guitar with knitting needles or alligator clips is just another traditional guitar playing technique.

Adding Max/MSP

Around the same time, home computers became fast enough to handle digital audio, and laptops became affordable enough to carry to gigs. I had already worked with notation and other music software on the Atari I got in 1986, so I was no stranger to using computers for music. Faced with the prospect of bringing a laptop on stage instead of all the cables, pedals and the mixer, I came quite naturally to the idea of moving everything onto the computer.

The software I used was Max/MSP. That decision was a natural one, too. My soon-to-be wife, Dafna Naphtali, had already worked with Max for about a decade, controlling an Eventide H3000 to do live sound processing on her voice and acoustic instruments. She suggested that I not look into ready-made applications but write my own. Since I had written business applications (1) some 10 years earlier, writing my own music application wasn’t such a strange idea, and she gave me a head start with node-based programming. It took about 2 years to get it working comfortably: I started programming Max in 1999, did the first performances with the software in 2000, and by 2001 was performing primarily with the guitar/software instrument (2).

Originally the goal was just to transfer the physical setup into the digital realm. I have worked for 14 years at Harvestworks Digital Media Arts Center in New York, overseeing the projects of clients, students and Artists In Residence. For them, simplifying one’s setup by moving it onto the computer was often the first goal.

While this was the plan, it went out the window as soon as I started experimenting. I already owned LiSa, Michel Waisvisz’s Live Sampling Instrument (3), distributed by STEIM. I had some fun with it, but it only made sense once I connected my MIDI guitar to Max, which in turn controlled LiSa. The basic idea of LiSa became the core of the software written in Max: cutting up audio and playing the material at different speeds and in different directions (4). That had nothing to do with my original reason for using a computer. As for LiSa, after a few months I figured out how to move its capabilities into MSP.

I moved to New York in January 2000. Over the years my musical interests shifted towards longer musical developments, wider dynamic ranges and minimalistic concepts, and rhythmically back from “pulse” to “groove”. All of this had its impact on the Endangered Guitar.

Next I will describe the major elements of the Endangered Guitar. Screenshots I took of various interfaces over the years suggest that the core approach to the software had been established by mid-2005. Meanwhile the guitar usually lay flat on the table, Keith-Rowe-style. This allowed me to focus entirely on sonic progressions by completely obliterating traditional guitar playing techniques (5). I could use both hands independently (including better access to the occasional sensor), or operate the laptop keyboard with one hand while using the other on the guitar.

I have to stress that the Endangered Guitar is still not designed to work with melody and harmony. Instead, it focuses on sound and timbre, rhythm and dynamics. Pitch does concern me, since every sound has a pitch (or numerous pitches). I do play with this, of course, but the main purpose is to organize sound in time.

(1) Ask me about database applications for undertakers, orthopedic shoemakers and for those who sell colostomy bags!

(2) See my mini-CD release on NurNichtNur, recorded June 2001 during a tour in France: “Endangered Guitar Processing”.

(3) It is strange that I can’t find a proper link to this groundbreaking software. STEIM has a successor to LiSa, and you may get some information about Waisvisz’s approach from their RoSa page.

(4) It is not a coincidence that it sounded like Teo Macero’s application of an echoplex to Sonny Sharrock’s solo on Miles Davis’ Jack Johnson album. I always wanted to sound that way, and Sonny Sharrock and the music of Miles Davis’ Bitches Brew period belong to my earliest Jazz influences.

(5) The occasional tapping notwithstanding.

Core: Sample Manipulation

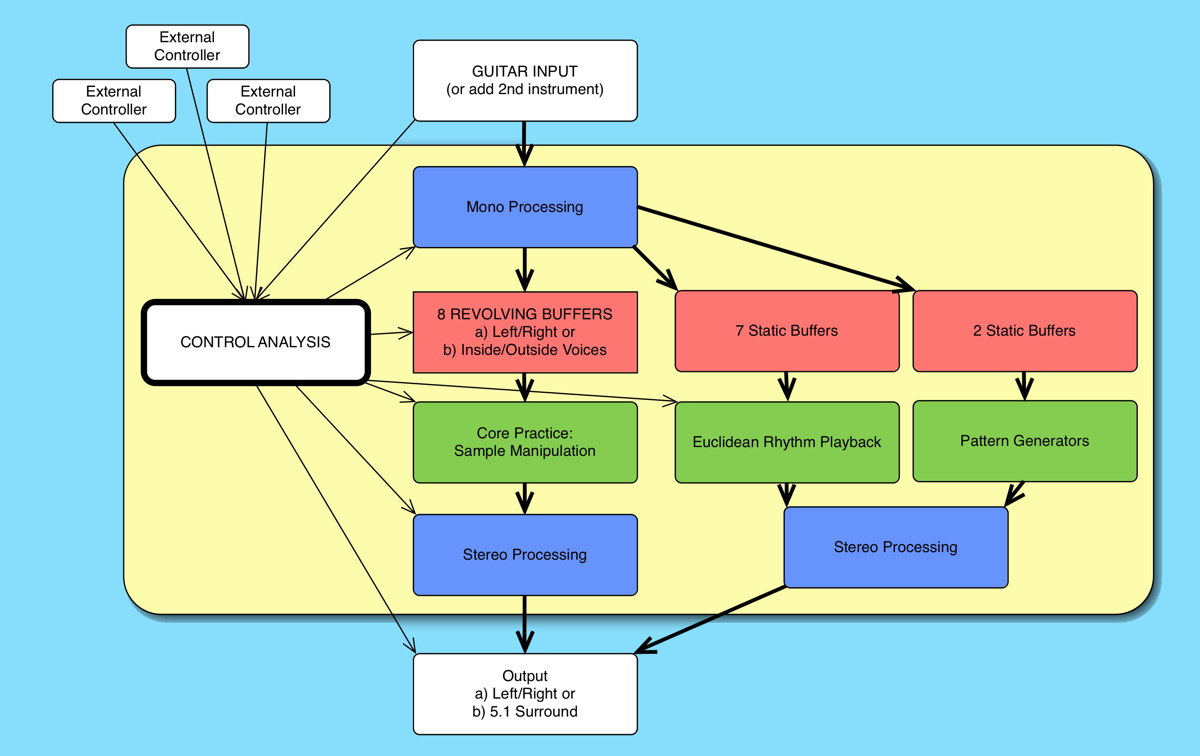

For an overview of the signal routing and the components of the software see the figure below:

Audio is recorded into “revolving buffers” that are constantly filled: when the end is reached, recording starts again from the beginning. The buffer can, of course, be played in different directions and at different speeds. Pressing the TAB key syncs up the recording and playing “heads” so that one perceives the audio as passing straight through. In fact, I had implemented a bypass routine for a while, but got rid of it after a few years when I realized I didn’t need it.
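The revolving buffer can be pictured as a circular buffer. Its record head wraps around to the start, and a separate play head can change speed and direction. The Python sketch below is a minimal illustration, not the Max/MSP patch itself. The class and method names (`RevolvingBuffer`, `sync`, `process`) and the linear interpolation are assumptions. `sync()` mimics the TAB key: it puts the play head on the record head at normal speed, so the input is heard as passing through.

```python
class RevolvingBuffer:
    """Circular ("revolving") buffer with a record head and an independent play head.

    Hypothetical sketch: class and method names are illustrative only.
    """

    def __init__(self, seconds: float, sr: int = 44100) -> None:
        self.size = max(1, int(seconds * sr))
        self.data = [0.0] * self.size
        self.rec = 0        # record head: integer sample index
        self.play = 0.0     # play head: fractional index, so any speed works
        self.speed = 1.0    # 2.0 = octave up, 0.5 = octave down, negative = reverse

    def sync(self) -> None:
        """Like the TAB key: align the play head with the record head at normal speed."""
        self.play = float(self.rec)
        self.speed = 1.0

    def _read(self) -> float:
        # Linear interpolation between the two samples around the play head.
        i = int(self.play) % self.size
        frac = self.play - int(self.play)
        j = (i + 1) % self.size
        return self.data[i] * (1.0 - frac) + self.data[j] * frac

    def process(self, block: list[float]) -> list[float]:
        out = []
        for x in block:
            self.data[self.rec] = float(x)              # overwrite the oldest audio
            out.append(self._read())
            self.rec = (self.rec + 1) % self.size       # wrap: recording restarts at the beginning
            self.play = (self.play + self.speed) % self.size
        return out


# Usage: a 2-second buffer at a toy sample rate of 8 Hz holds 16 samples.
buf = RevolvingBuffer(seconds=2.0, sr=8)
buf.sync()
print(buf.process([1.0, 2.0, 3.0, 4.0]))  # [1.0, 2.0, 3.0, 4.0]: audio "passes through"
buf.speed = -1.0                          # from here on, stored audio is read backwards
```

Because the play head is a fractional index, speeds other than whole numbers need no special handling.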

Originally I used MIDI guitars to control the software, but they were abandoned by 2004. I figured out how to work with Miller Puckette’s fiddle~ object, allowing for direct pitch and velocity analysis of the incoming sound. This freed me from the need for specific guitars, pickups, and MIDI interfaces. Plus, advantages of MIDI guitars such as providing information about string bending did not yield significantly different results on a musical level.

I use pitch and velocity information from fiddle~, e.g. to affect the speed of the “playhead”. Notes higher than C (on the B string) increase the speed by corresponding intervals, and lower notes decrease it. With the arrow keys the “spread” can be increased from semitones (C# plays the buffer a semitone higher) up to a double octave (C# plays the buffer two octaves higher). To work with microtonality, the “spread” can also be used to divide the octave into 24 equal parts instead of 12. Specific settings allow for working directly with granular synthesis approaches.

I often use a buffer length of 2 seconds, but it can range from 1 ms to 15 seconds. When the speed is changed, the audience and other players often notice a considerable delay between playing and the audible result. Which setting I choose depends on how fast I need to interact with others. If quicker interaction is required I go to shorter buffer lengths, but in solo situations I sometimes use the entire range.

In the very beginning I also experimented with other ways of using data from the analysis, such as a moving average. However, if I didn’t hear an immediate change as the result of my playing, I felt I had lost control. I will come back to this later, because today I consider “losing control” one of the main components of this hybrid instrument.
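The pitch-to-speed mapping can be written as an equal-tempered ratio. The sketch assumes the tracker reports pitch as a (possibly fractional) MIDI note number. It also assumes that the reference C on the B string is C4 (MIDI 60, first fret in standard tuning). `spread` is a hypothetical stand-in for the arrow-key setting: it scales how many semitones of buffer transposition each played semitone produces. Reading the 24-part microtonal setting as "one played semitone moves the buffer a quarter tone" is also an interpretation.

```python
REFERENCE_MIDI = 60.0  # assumption: C4, the C on the B string (1st fret, standard tuning)


def playback_speed(midi_note: float, spread: float = 1.0,
                   reference: float = REFERENCE_MIDI) -> float:
    """Play-head speed ratio for a tracked pitch.

    spread = 1.0  -> one played semitone transposes the buffer by a semitone
    spread = 24.0 -> one played semitone transposes it by two octaves
    spread = 0.5  -> one played semitone transposes it by a quarter tone (24 per octave)
    """
    semitones = (midi_note - reference) * spread
    return 2.0 ** (semitones / 12.0)


print(playback_speed(72))               # 2.0: an octave above C plays the buffer an octave up
print(playback_speed(48))               # 0.5: an octave below plays it an octave down
print(playback_speed(61, spread=24.0))  # 4.0: C# plays the buffer two octaves higher
```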
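A few lines show why a moving average can feel like a loss of control. When the played pitch jumps, the smoothed value only arrives once the whole window has filled with new reports. This is a generic moving average, not the author's patch, and the window size of 4 is an arbitrary assumption.

```python
from collections import deque


class MovingAverage:
    """Average of the last `n` analysis reports (e.g. tracked pitches)."""

    def __init__(self, n: int) -> None:
        self.window = deque(maxlen=n)   # oldest values drop out automatically

    def __call__(self, value: float) -> float:
        self.window.append(value)
        return sum(self.window) / len(self.window)


smooth = MovingAverage(4)
played = [60, 60, 60, 60, 72, 72, 72, 72]   # C4, then a jump up to C5
print([smooth(p) for p in played])
# [60.0, 60.0, 60.0, 60.0, 63.0, 66.0, 69.0, 72.0]
```

The new note reaches the controlled parameter only after four reports; in between, the parameter sits at values nobody played.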

Audio Inputs

Originally the input was a single guitar sound coming in on the left channel. The right channel has since been used for several sources:

- a second pickup mounted on the guitar’s headstock;
- built-in piezos;
- audio from the second neck of a double-neck guitar;
- additional piezo strips on the table;
- electronics such as Rob Hordijk’s Blippoo Box.

Eventually this led to input from other live players (sound poets, hyperpiano, violin, percussion, string quartet, etc.) (1).

The analysis and the sound of each channel can be turned off independently. This means, e.g., that the guitar can serve only as the controller for the violin processing, or the other way around.

The sound is then fed into a row of processes. As of this writing, I use bit and sample rate reduction, freeze reverb and ring modulation, plus an assortment of VST plugins (2). As with many routines over the years, some processes ran their course and were abandoned after a while, such as convolving different audio streams.

This section is “mirrored” after the sample manipulation unit, so I can apply processes to the input, the manipulated audio, or both.
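The sketch below shows textbook versions of three of the processes named above, plus the mirrored routing. Bit reduction quantizes samples, sample rate reduction is done as sample-and-hold, and ring modulation multiplies by a sine carrier. Everything is a hypothetical illustration on plain lists of samples. The function names, bit depth, reduction factor and the `signal_path` helper are assumptions, and the freeze reverb and VST plugins are not modeled. Passing `None` for either effects stage shows how processing can go on the input, the manipulated audio, or both.

```python
import math
from typing import Callable, List, Optional

Process = Callable[[List[float]], List[float]]


def bit_reduce(bits: int) -> Process:
    """Quantize samples in [-1, 1] to a coarser grid (bit depth reduction)."""
    levels = 2 ** (bits - 1)
    return lambda xs: [round(x * levels) / levels for x in xs]


def rate_reduce(factor: int) -> Process:
    """Sample rate reduction: hold every `factor`-th sample for `factor` samples."""
    return lambda xs: [xs[i - i % factor] for i in range(len(xs))]


def ring_mod(freq: float, sr: int = 44100) -> Process:
    """Multiply by a sine carrier; the phase continues across successive blocks."""
    n0 = 0

    def apply(xs: List[float]) -> List[float]:
        nonlocal n0
        out = [x * math.sin(2 * math.pi * freq * (n0 + n) / sr) for n, x in enumerate(xs)]
        n0 += len(xs)
        return out

    return apply


def chain(*procs: Process) -> Process:
    """Run processes in series, like a row of effects."""
    def apply(xs: List[float]) -> List[float]:
        for p in procs:
            xs = p(xs)
        return xs
    return apply


def signal_path(block: List[float], pre_fx: Optional[Process],
                manipulate: Process, post_fx: Optional[Process]) -> List[float]:
    """Input -> effects -> sample manipulation -> mirrored effects; None bypasses a stage."""
    x = pre_fx(block) if pre_fx else block
    y = manipulate(x)
    return post_fx(y) if post_fx else y


# Usage: effects only after the manipulation (a simple reversal stands in for it here).
post = chain(rate_reduce(2), bit_reduce(2))
print(signal_path([0.1, 0.9, 0.4, 0.2], None, lambda xs: xs[::-1], post))
# [0.0, 0.0, 1.0, 1.0]
```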

(1) “Hyperpiano” is Denman Maroney’s term for his approach to the piano. A few of my live sound processing projects can be explored on my website.

(2) I am fond of GRM Tools. I might have been able to replace some of them with my own programming; however, I am not sure it would ever sound that good!

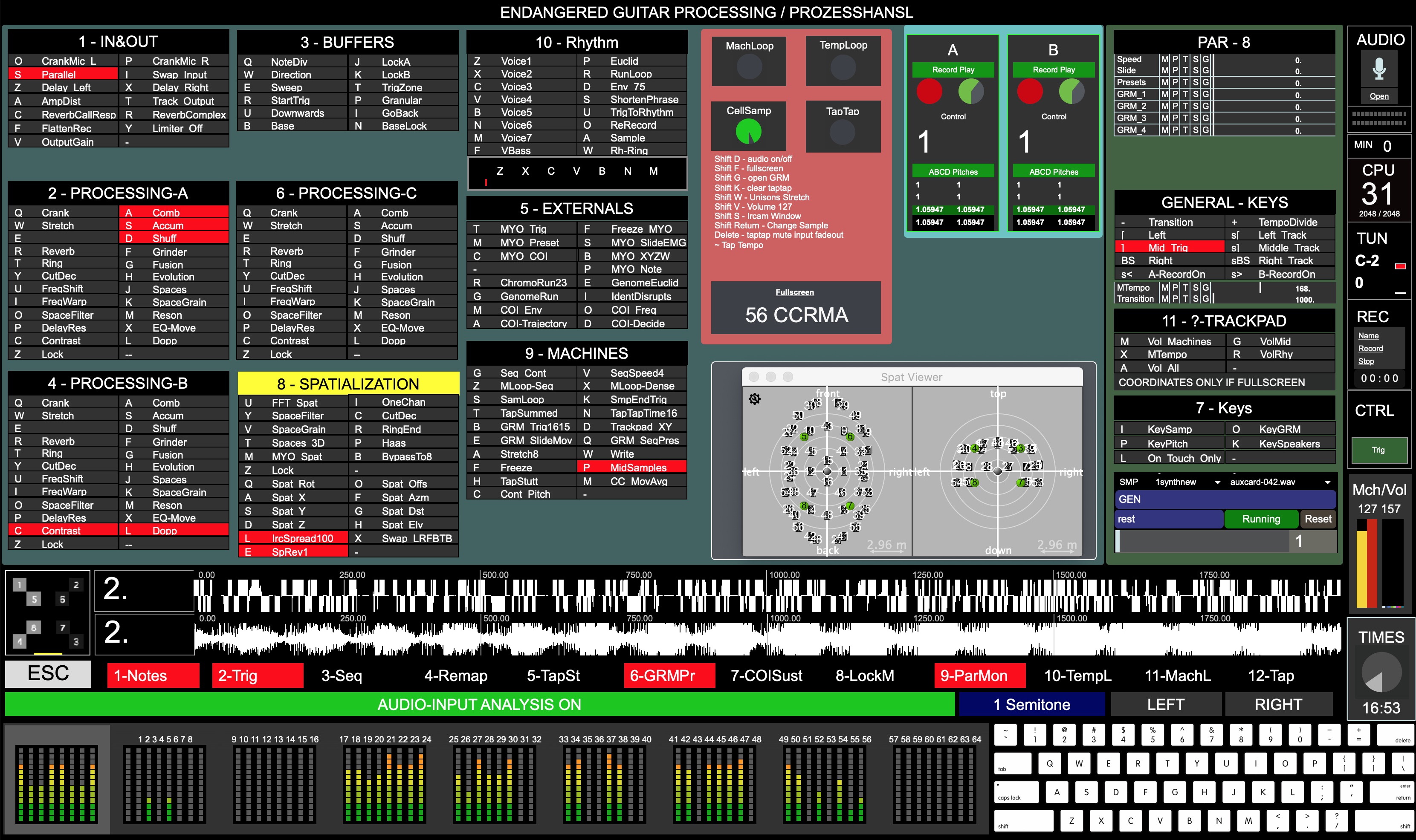

Modularity

The “mirroring” is easy to implement because Max/MSP offers many options to program in a modular way - each instance of the effects group can be seen as being recreated from a template (1). Those files, once defined, can be used numerous times in the software, and this makes for a very modular system that can be changed rapidly. The Endangered Guitar software is constructed from over 200 files, nested within each other up to seven levels deep. What you see in the heavily color-coded interface is only the “container” patch (2). This may sound complicated, but the hours spent on that kind of preparation have saved me weeks of hunting down multiple copies of the same routine.

Where this system really shines is when I introduce new approaches. Once the main routine is written, it just needs a few arguments. It is then automatically:

- included in the top interface;
- hooked up to the various levels of the reset system;
- connected, parameter by parameter, to all the modes of control (values from audio analysis, external sensors and controllers, etc.).

Hooking up an external sensor is easy as well. Besides being included in the system as explained, its output can be routed to any available parameter by setting arguments.

As for controllable parameters, a cursory check reveals that the system is currently set up to control approximately 100 parameters. Any incoming control value could be routed to any of these parameters, but of course nobody could actually control that amount of data. For the sake of modularity and standardization (and because I do not know what I may need tomorrow), these control channels are theoretically available. However, many of them I haven’t used in years, and some I have certainly forgotten about. In fact, I barely use 10% of them during performances, and it helps that the interface only shows parameters that are actually in use.

This approach emerged from the notion of the “instrument as process” - in fact, there have been times in my life where I was making changes and adjustments every single day, even after performances late in the hotel room.
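The template-plus-arguments idea and the any-source-to-any-parameter routing can be illustrated outside of Max. The Python sketch below is an analogy only. `make_effect` plays the role of an abstraction instantiated with arguments, and each instance registers its parameters automatically. The interface lists only parameters that something is routed to. All names, the parameter ranges, and the normalization of control values to 0..1 are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Param:
    name: str
    lo: float
    hi: float
    value: float


class Registry:
    """Every parameter of every module instance, plus control-source routings."""

    def __init__(self) -> None:
        self.params: Dict[str, Param] = {}
        self.routes: Dict[str, List[str]] = {}   # control source -> parameter keys

    def register(self, instance: str, name: str, lo: float, hi: float) -> None:
        key = f"{instance}.{name}"
        self.params[key] = Param(key, lo, hi, lo)

    def route(self, source: str, key: str) -> None:
        if key not in self.params:
            raise KeyError(key)
        self.routes.setdefault(source, []).append(key)

    def control(self, source: str, x: float) -> None:
        """Scale a normalized control value (0..1) into each routed parameter's range."""
        x = min(max(x, 0.0), 1.0)
        for key in self.routes.get(source, []):
            p = self.params[key]
            p.value = p.lo + x * (p.hi - p.lo)

    def visible(self) -> List[str]:
        """Only parameters that some control source is routed to are shown."""
        used = {key for keys in self.routes.values() for key in keys}
        return sorted(used)


def make_effect(reg: Registry, instance: str, params: Dict[str, Tuple[float, float]]) -> None:
    """'Template': one call creates an instance and hooks up all of its parameters."""
    for name, (lo, hi) in params.items():
        reg.register(instance, name, lo, hi)


reg = Registry()
fx_params = {"bits": (1.0, 24.0), "ring_freq": (20.0, 2000.0)}
make_effect(reg, "fx_in", fx_params)     # instance before the sample manipulation
make_effect(reg, "fx_out", fx_params)    # mirrored instance after it
reg.route("pitch", "fx_out.ring_freq")
reg.control("pitch", 0.5)
print(reg.params["fx_out.ring_freq"].value)  # 1010.0
print(reg.visible())                         # ['fx_out.ring_freq']
```

Adding a new effect becomes a single `make_effect` call with different arguments; nothing else has to be wired by hand.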

(1) In Max/MSP parlance, I use bpatchers, poly~ objects and abstractions.

(2) The interface is entirely done with bpatchers - Max’s presentation mode wasn’t out yet.

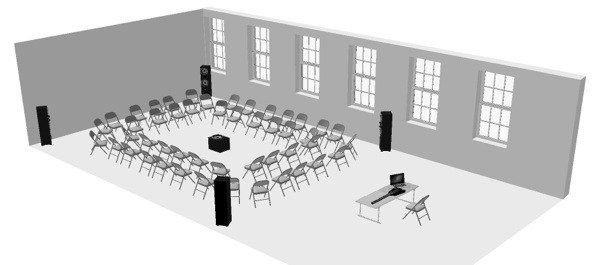

Multichannel Sound

(Basic Multichannel Sound Setup / Outside vs. Center 2006)

I have used this approach in very different setups, with the single speaker moved into the geographical center, or to the back of the audience, into an adjacent room or hanging from the ceiling (1).

(1) A list of approaches can be found on my website.

Euclidean Rhythms

From about 2005 on I began adding a “drum computer” setting. There were various experiments over the years, even using drum samples, or convolving drum sounds with the actual guitar input. Eventually I settled on the Euclidean Rhythm concept as outlined by Godfried Toussaint, with sounds recorded on the fly during performance. I do not like Euclidean Rhythms because generating rhythms out of two integers should appeal to computer programmers. I like them because my works tend to use ostinati, with phase techniques superimposing different odd-meter rhythms, and Toussaint’s concept simply yielded the best results. I create up to 7 of these rhythms with different lengths, distributed in stereo or quad space. The sounds we hear are taken from various points in the signal chain, so the rhythms can be seen as another way of live sound processing. Materials are recorded into static buffers, so they only change when I need them to. Euclidean Rhythms are also used for two additional pattern generators, which I predominantly use in multichannel setups to create immersive environments.
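Toussaint's paper shows that these rhythms are produced by Bjorklund's algorithm, which distributes k onsets as evenly as possible over n steps. The Python sketch below is a common list-based formulation of that algorithm. The `triggers` helper is a hypothetical illustration, not the author's patch. It plays several patterns of different lengths against one step counter, which produces the phasing described above.

```python
from typing import List


def euclid(k: int, n: int) -> List[int]:
    """Distribute k onsets (1) as evenly as possible over n steps (Bjorklund's algorithm)."""
    if not 0 <= k <= n:
        raise ValueError("need 0 <= k <= n")
    if k == 0:
        return [0] * n
    a = [[1] for _ in range(k)]          # groups that start with an onset
    b = [[0] for _ in range(n - k)]      # remainder groups
    while len(b) > 1:
        m = min(len(a), len(b))
        # Append one remainder group to each leading group...
        merged = [a[i] + b[i] for i in range(m)]
        # ...and whatever is left over becomes the new remainder.
        b = a[m:] if len(a) > m else b[m:]
        a = merged
    return [step for group in a + b for step in group]


def triggers(patterns: List[List[int]], step: int) -> List[int]:
    """Indices of the patterns that fire on a global step; unequal lengths drift in and out of phase."""
    return [i for i, p in enumerate(patterns) if p[step % len(p)]]


print(euclid(3, 8))   # [1, 0, 0, 1, 0, 0, 1, 0]  (tresillo)
print(euclid(5, 8))   # [1, 0, 1, 1, 0, 1, 1, 0]  (cinquillo)
layers = [euclid(3, 8), euclid(2, 5), euclid(4, 7)]
for step in range(8):
    print(step, triggers(layers, step))
```

With lengths 8, 5 and 7, the three layers only line up again after 280 steps, which is where the superimposed odd meters come from.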

These pattern generators are followed by another instance of the effects processing section described earlier, so they can be processed the same way.

Sound Analysis as Control Source

The main tool for the analysis of incoming sound is still Miller Puckette’s fiddle~ object. There may be better options for pitch tracking these days, but I have a decade of experience “playing fiddle~”. I have a good (albeit often sub- or unconscious) understanding of the way it behaves, such as the chaotic data it provides when I use my steel pot scrubber. I know when it reports a new “event” depending on how hard I hit the strings, or which mallet I use. Replacing fiddle~ with something else would feel as if I had to learn to play my instrument all over again.

(Tools, 2-channel Guitar and Computer Setup 2007)

At one point I used FFT analysis to draw information from the different overtones. I eventually abandoned it, since it didn’t make much difference musically. Currently I only use pitch and velocity information, and “event” (which depends on how long the volume has to go down before the object reports a new action).
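A new “event” depends on how long the level has to drop, and a simple re-arming onset detector can illustrate this. It is explicitly not fiddle~'s internal algorithm. The dB thresholds, the frame-based `min_quiet` count and the function name are hypothetical. They are chosen only to show why a short dip between strokes may not register as a new event while a longer one does.

```python
from typing import List, Sequence


def detect_events(levels_db: Sequence[float], attack_db: float = -30.0,
                  rearm_db: float = -40.0, min_quiet: int = 3) -> List[int]:
    """Return the frame indices at which a new event is reported.

    After an event the detector is disarmed; it re-arms only once the level
    has stayed below `rearm_db` for `min_quiet` consecutive frames.
    """
    events: List[int] = []
    armed = True
    quiet_run = 0
    for i, level in enumerate(levels_db):
        if level < rearm_db:
            quiet_run += 1
            if quiet_run >= min_quiet:
                armed = True
        else:
            quiet_run = 0
            if armed and level >= attack_db:
                events.append(i)
                armed = False
    return events


# Usage: level per analysis frame in dB. The dip at frame 3 is only one frame long.
levels = [-60, -20, -25, -50, -20, -50, -50, -50, -20]
print(detect_events(levels))               # [1, 8]: the short dip does not re-arm
print(detect_events(levels, min_quiet=1))  # [1, 4, 8]
```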

External Controller Input

Over the years I have experimented with numerous external controllers as well, some of them disappeared quickly, others were in use for years. The two most successful ones were an infrared proximity sensor and the iPhone’s accelerometer. As of this writing I occasionally work with the Leap Motion controller.

The use of a proximity sensor had an interesting origin. Since I move body and hands in sync with the music, I often got asked what parameters I would control with my hand motions. But there weren’t any sensors, it was just the music that moved me. However, it gave me the idea to use proximity sensors on the instrument. Alas, on the first test it became clear it wouldn’t work - music moves my hands, not the other way around. What eventually made sense musically (and I have played with that setting for about 5 years) was to situate one sensor in front of me, and to move my head in and out of the infrared beam. I used it to turn specific routines on and off, which allowed me to create a third action when both my hands were busy working on the strings.
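Moving the head in and out of an infrared beam to turn a routine on and off amounts to a toggle with hysteresis. The sketch assumes a sensor reading normalized to 0..1, where higher means closer, and two hypothetical thresholds. A separate, lower exit threshold keeps a noisy reading near the edge of the beam from toggling the routine repeatedly.

```python
class BeamToggle:
    """Flip a routine on/off each time the head enters an infrared beam."""

    def __init__(self, enter: float = 0.6, leave: float = 0.4) -> None:
        if leave >= enter:
            raise ValueError("leave threshold must be below enter threshold")
        self.enter, self.leave = enter, leave
        self.inside = False       # is the head currently in the beam?
        self.routine_on = False   # state of the controlled routine

    def update(self, reading: float) -> bool:
        if not self.inside and reading >= self.enter:
            self.inside = True
            self.routine_on = not self.routine_on   # toggle once per entry
        elif self.inside and reading <= self.leave:
            self.inside = False                     # must leave fully before the next toggle
        return self.routine_on


toggle = BeamToggle()
readings = [0.1, 0.7, 0.5, 0.65, 0.3, 0.8]
print([toggle.update(r) for r in readings])
# [False, True, True, True, True, False]
```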

Using the iPhone accelerometer comes quite naturally to a guitarist. I held it in my left hand, and a metal phone case allowed me to use it in ways similar to a pedal steel bar. As with other controllers, the data was routed to affect a variety of parameters. I used this controller for about 3 years (1).

(1) You can see an example in the first few seconds of this concert video from 2010.

Unpredictability

One of the main principles of the software is that unpredictable or “fuzzy” elements are deliberately programmed into it. Even before I was working on the software, I was aware that my improvisations became predictable if nothing surprising happened. I simply play better if I have to struggle with unforeseen situations, when something happens that keeps me on the edge of my seat.

A “source of uncertainty” (1) can be just the way the room acoustics respond to specific frequencies, so that material played yesterday elsewhere doesn’t sound good today. It could even be strings or sensors breaking, the machine crashing (2), accidentally pressing the wrong key, etc. To facilitate some kind of change, I was already limiting the tools I brought to the gig to whatever fit in my backpack, so what I had available at the concert was sometimes a little arbitrary.

This is also the reason why there are no presets in my software. While it is easy in Max/MSP to implement a preset structure, I threw it out quickly. I noticed I used presets in situations where I wasn’t sure what to do next musically, and jumping to some preset was an easy way out. I had already experienced the same situation 10 years earlier with loop pedals: if I didn’t know how to get out of the current situation, I started a loop. The results, though, were never satisfactory, so eventually the preset structure had to go, just as the loop pedals had a decade earlier.

That does not mean that what I play is “new” in the sense that you have never heard it before. Sometimes this is the case, but to characterize my performances I would like to offer Earle Brown’s understanding of the term “open form composition”: the parts of the composition are arranged differently with every new performance (3). The performances are not planned in the sense that I determine how to start and end, and what to do in between. Yet there is an enormous amount of practicing and planning behind each individual part when it is presented in performance. The sounds I coax from the guitar draw on 25 years of experience; the use of the software rests on 15 years of practicing (4). The improvisational element concerns:

- the choice of the actual sonic material (especially with regard to the sound of the room and the sound system);
- the order and timing of parts;
- how to transition from one part to the next;
- when to make sharp cuts;
- dynamics and other elements of the form;
- dealing with the occasional technical and musical failure.

Improvising is here merely the technique that helps us deal with the unforeseen. Bruce Ellis Benson tries to depart from the usual binary scheme of composition/improvisation, as is common in European discourse. He suggests that improvisation is when one ventures into the unknown, something both composers and performers do (5) (Benson 2003). I do not want to put too much weight on this. In 2016 it should be obvious that these terms can describe cultural notions, but they are not useful for describing what we actually hear. However, the notion of improvising as a technique for dealing with unpredictability does accurately describe one of the main ingredients of my performances.

But how does one achieve unpredictability when creating software? By deliberately programming it into the machine. First, I use weighted random functions. The machine does not react the same way to input (pitch, sensors, etc.) every time. Instead, it responds within a range of values, depending on the desired musical outcome. It may go without saying that completely random values (as in equal weight for each value) do not yield musically interesting results. Some parameters I have tweaked for years to produce the right amount of fuzziness without becoming arbitrary.

Recently I have made increasing use of feedback systems. Good results came from analyzing the audio output instead of the input, and from feeding the audio back into the input. It is quite unpredictable which parts of the spectrum are emphasized and which are not, and which provide good material for the analysis. Here we can additionally benefit from the shortcomings of pitch trackers such as fiddle~: feeding them material without a fundamental results in erratic behavior.

From 2005 on I have increasingly made use of these strategies. I relinquish control to the machine instead of seeing it as an extension of my guitar: I play with the machine rather than playing the machine. The amount of unpredictability varies with the project. The machine has less autonomy in ensemble situations, but in solo performances the Endangered Guitar tends to be this crazy thing I struggle with.

(1) I came to like Don Buchla’s use of this term to name a synthesizer module that produces unpredictable control values.
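A weighted random response of the kind described above can be sketched with Python's standard `random` module. The triangular weighting, the clamping range and the function names are assumptions made for illustration. Triangular weighting makes small deviations from the target more likely than large ones. The text does not specify which distributions the software uses. An equal-weight alternative would correspond to `random.uniform`, which is what the text calls musically uninteresting.

```python
import random
from typing import Optional, Sequence, TypeVar

T = TypeVar("T")
_default_rng = random.Random()


def fuzzy_response(target: float, fuzz: float, lo: float, hi: float,
                   rng: Optional[random.Random] = None) -> float:
    """Respond near `target`: deviations up to +/- fuzz, small ones most likely, clamped to [lo, hi]."""
    rng = rng if rng is not None else _default_rng
    value = rng.triangular(target - fuzz, target + fuzz, target)
    return min(max(value, lo), hi)


def weighted_choice(options: Sequence[T], weights: Sequence[float],
                    rng: Optional[random.Random] = None) -> T:
    """Pick one response; larger weights are chosen more often."""
    rng = rng if rng is not None else _default_rng
    return rng.choices(options, weights=weights, k=1)[0]


# Usage: a played note asks for normal speed, but the machine answers a little differently each time.
rng = random.Random(2016)
speeds = [fuzzy_response(1.0, 0.25, 0.5, 2.0, rng) for _ in range(5)]
mode = weighted_choice(["reverse", "forward", "freeze"], [1, 6, 2], rng)
print(speeds, mode)
```

Tuning the `fuzz` width and the weights per parameter is what turns "random" into "fuzzy but not arbitrary".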

(2) It is baffling (but also attests to the stability of Max/MSP) that in 15 years the software crashed just four times live on stage.

(3) As a method in itself it does not have to be of value - a musician playing the same licks over and over does also, strictly speaking, “open form composition”.

(4) “Practicing” is a good point: I am always amazed by people whipping up some patches the night before the gig and then getting lost on stage. Exceptions notwithstanding, one does hear a lack of preparation.

(5) …thus replacing one binary scheme with the next.

Outlook

What is the future of this hybrid interactive instrument? One development in recent years is that I have branched out to other sound sources: I do live sound processing of other instrumentalists, from single performers to whole ensembles. Sometimes I process a tabla machine or a room microphone. Technically this is not the “Endangered Guitar”, but it is still “hybrid”, in that it comprises software plus another instrument. It is also still “interactive”, in that data from the audio input is interpreted in various and unpredictable ways to affect processing parameters.

I am currently extending the software by including an external data set: over 600,000 lines from my own DNA analysis. As before, I hope to struggle with the software’s unpredictability, but this time I am using data from my DNA to influence the “fuzziness” of the machine. Some tweaking is still needed; the piece is aptly called “Conflict Of Interest”. Working with my own DNA data certainly makes sense on a conceptual level. It has also led me to consider working with other data streams in the future, such as live input from the internet.

Lastly, the biggest challenge would be to develop the instrument into a true improvising machine. In its current stage the Endangered Guitar does not qualify as such, because an improvising machine would need memory. It would make decisions based not only on the current input, but also on numerous previous performances. If it is improvising, it needs to react to unforeseen situations, so it would need extensive pattern recognition and machine learning algorithms. This would be an undertaking that requires concentrating on nothing else for a few years.
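The sketch below shows one way a genotype file could become a stream of control values, for example for the amount of “fuzziness”. The file layout is an assumption: tab-separated lines with the genotype in the fourth column and `#` comment lines, as in common consumer raw-data exports. The mapping of base pairs to numbers between 0 and 1 is purely hypothetical, since the text does not describe how the DNA data is actually mapped. For a real file, the generator can be fed the open file object line by line.

```python
from typing import Iterable, Iterator

BASES = {"A": 0, "C": 1, "G": 2, "T": 3}


def genotype_stream(lines: Iterable[str]) -> Iterator[float]:
    """Yield one control value in [0, 1] per usable genotype line (hypothetical mapping)."""
    for line in lines:
        if not line.strip() or line.startswith("#"):
            continue                                  # skip blanks and header comments
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 4:
            continue
        genotype = fields[3].strip()
        if len(genotype) != 2 or any(base not in BASES for base in genotype):
            continue                                  # skip no-calls ("--") and other codes
        # 16 possible base pairs, spread evenly from 0.0 (AA) to 1.0 (TT)
        yield (BASES[genotype[0]] * 4 + BASES[genotype[1]]) / 15


# Usage with made-up sample lines (identifiers are hypothetical):
sample = [
    "# rsid\tchromosome\tposition\tgenotype",
    "rs0001\t1\t100\tAA",
    "rs0002\t1\t200\tCG",
    "rs0003\t1\t300\t--",
    "rs0004\t2\t400\tTT",
]
print(list(genotype_stream(sample)))  # [0.0, 0.4, 1.0]
```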

Listening Example

An Endangered Guitar retrospective was released on the Danish label CLANG in June 2016, containing excerpts from performances between 2004 and 2011. Called “Deus Ex Machina - Endangered Guitar Live”, the music is grouped into various sonic themes, with short pieces acting as interludes in between. All pieces are from the time when the Endangered Guitar became an interactive instrument. Another reason for choosing the works on this release is that these were new sonic universes – by then I had left behind the prepared guitar / noise formula that dominated my playing throughout the 90s, figured out which live sound processing approaches worked best, and incorporated Euclidean Rhythms.

Book References

Bailey, D. (1987). Musikalische Improvisation: Kunst ohne Werk. Hofheim am Taunus.

Benson, B.E. (2003). The Improvisation of Musical Dialogue: A Phenomenology of Music. Cambridge.

Chadabe, J. (1984). Interactive Composing. Computer Music Journal. VIII:1, 23

Toussaint, G.T. (2005). The Euclidean algorithm generates traditional musical rhythms. Extended version of the paper that appeared in Proceedings of BRIDGES: Mathematical Connections in Art, Music and Science. 2005, 47-56.

Illustrations

Figure 1: Endangered Guitar Performance Photo by Matthew Garrison (2012)

Figure 2: Pre-Computer Performance Photo (1999)

Figure 3: Signal Routing Endangered Guitar Software (2016)

Figure 4: Screenshot of Endangered Guitar Software Interface (2015)

Figure 5: Basic Multichannel Sound Setup / Outside vs. Center (2006)

Figure 6: Tools, 2-channel Guitar and Computer Setup (2007)

UPDATES

As explained, the development of this software is open-ended, so there have been a number of significant updates since the publication of this article. These happened primarily during two residencies at Lucas Artists Residencies at Montalvo in 2017 and 2018, which allowed for major upgrades and a rewrite of the system’s structure.

-

In 2018 I upgraded the multichannel facilities, moving on from my 5.1 surround days 15 years ago and from octophonic systems. Sounds can now be spatialized in any configuration and placement across 3 to 64 speakers. The system is also scalable, so I am looking forward to even more loudspeakers to play with.
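Spatializing a source over an arbitrary number of loudspeakers can be illustrated with pairwise constant-power panning on a horizontal ring. The source is placed between the two neighboring speakers whose angles bracket its own. Their gains follow a cosine/sine crossfade, so the total power stays constant. This is a standard textbook technique swapped in for illustration, not necessarily what the author's system does. It assumes distinct speaker azimuths in degrees and ignores elevation and distance.

```python
import math
from typing import List, Sequence


def ring_gains(source_deg: float, speaker_degs: Sequence[float]) -> List[float]:
    """Pairwise constant-power panning of one source over a ring of 3 to 64 speakers."""
    n = len(speaker_degs)
    if not 3 <= n <= 64:
        raise ValueError("expected 3 to 64 speakers")
    order = sorted(range(n), key=lambda i: speaker_degs[i] % 360)
    gains = [0.0] * n
    src = source_deg % 360
    for k in range(n):
        a, b = order[k], order[(k + 1) % n]          # neighboring speakers on the ring
        start = speaker_degs[a] % 360
        width = (speaker_degs[b] - speaker_degs[a]) % 360
        if width == 0:
            continue                                 # duplicate azimuths are not supported
        offset = (src - start) % 360
        if offset <= width:
            frac = offset / width                    # 0 at speaker a, 1 at speaker b
            gains[a] = math.cos(frac * math.pi / 2)
            gains[b] = math.sin(frac * math.pi / 2)
            return gains
    return gains


quad = [0, 90, 180, 270]
print(ring_gains(45, quad))   # equal gains on speakers 0 and 1, silence elsewhere
```

Because only the two nearest speakers are active, the same function works unchanged for 3 speakers or for 64, which matches the scalability described above.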

-

For my Conflict of Interest project in 2017 I implemented various routines that allow data from my own genetic analysis to control parameters. In 2018 I implemented a synthesizer as a new sound source to process, whose oscillators are controlled by the same genetic data. I also included a MYO muscle sensor as a new control source, as the 2018 version of the project did not use a guitar.

-

After the initial processing of solely my own guitar, I extended the system to allow for the processing of other instruments (or suitcases). As I am frequently processing more than one instrumentalist, in 2017 I established a double processing structure, in that I can independently analyze and process two streams of audio at the same time.

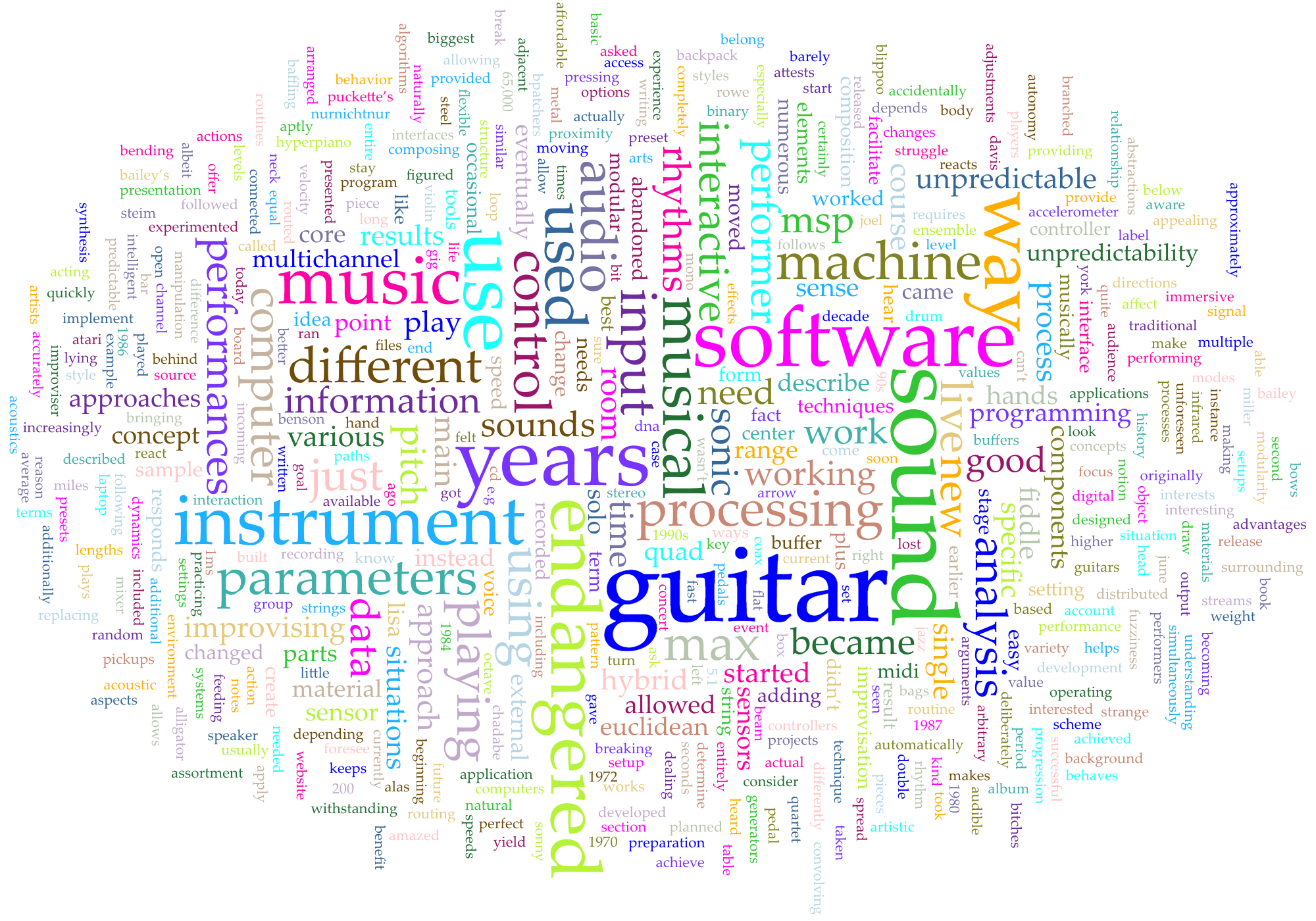

ARTICLE WORD CLOUD

(Performance Berlin 2016 / Photo by Jutta Ravenna)